This is a story about stolen intelligence. It’s a long but necessary history, about the deceptive illusions of AI, about Big Tech goliaths against everyday Davids. It’s about vast treasure troves and mythical libraries of stolen data, and the internet sleuths trying to solve one of the biggest heists in history. It’s about what it means to be human, to be creative, to be free. And what the end of humanity – post-humanity, trans-humanity, the apocalypse even – looks like.

It’s an investigation into what it means to steal, to take, to replace, to colonise and conquer. Along the way we’ll learn what AI really is, how it works, and what it can teach us about intelligence – about ourselves – drawing on some historical and philosophical giants.

Because we have this idea that intelligence is this abstract, transcendent, disembodied thing, something unique and special, but we’ll see how intelligence is much more about the deep, deep past and the far, far future, something that reaches out powerfully through bodies, people, the world.

Sundar Pichai, CEO of Google, was reported to have claimed that, ‘AI is one of the most important things humanity is working on. It is more profound than, I dunno, electricity or fire’. We’ll see how that might well be true. It might change everything dizzyingly quickly – and like electricity and fire, we need to find ways of making sure that vast, consequential and truly unprecedented change can be used for good – for everyone – and not evil. So we’ll get to the future, but it’s important we start with the past.

Contents:

- A History of AI: God is a Logical Being

- History of AI: The Impossible Totality of Knowledge

- The Learning Revolution

- What Are Neural Nets?

- OpenAI and ChatGPT

- The Scramble for Data

- Stolen Labour

- Stolen Libraries and the Mystery of ‘Books2’

- Copyright, Property, and the Future of Creativity

- The End of Work and a Different AI Apocalypse

- Mass Unemployment

- The End of Humanity

- Or a New Age of Artificial Humanity

- Conclusion: Getting to the Future

A History of AI: God is a Logical Being

Intelligence. Knowledge. Brain. Mind. Cognition. Calculation. Thinking. Logic.

We often use these words interchangeably, or at least with a lot of overlap, and when we do drill down into what something like ‘intelligence’ means, we find surprisingly little agreement.

Can machines be intelligent in the same way humans can? Will they surpass human intelligence? What does it really mean to be intelligent? Commenting on the first computers, the press referred to them as ‘electronic brains’.

Manchester, England

There was a national debate in Britain in the fifties around whether machines could think. After all, in some ways a computer in the fifties was already far more intelligent than any human.

The father of both the computer and AI, Alan Turing, contributed to the discussion in a BBC radio broadcast in 1951, claiming that ‘it is not altogether unreasonable to describe digital computers as brains’.

This overlap – between computers, AI, intelligence, and brains – strained the idea that AI was one thing. A thorough history would have to include transistors, electricity, computers, the internet, logic, mathematics, philosophy, neurology, society. Is there any understanding of AI without these things? Where does history begin?

This ‘impossible totality’ will echo through this history, but there are two key historical moments: The Turing Test and the Dartmouth College Conference.

Turing wrote his now famous paper – Computing Machinery and Intelligence – in 1950. It began with: ‘I propose to consider the question, “Can machines think?” This should begin with definitions of the meaning of the terms “machine” and “think”.’

He suggested a test: if a person who didn’t know who or what they were conversing with could not tell talking to a machine apart from talking to a human, then the machine could be called intelligent.

Ever since, the conditions of a Turing Test have been debated. How long should the test last? What sort of questions should be asked? Should it just be text based? What about images? Audio? One competition – the Loebner Prize – offered $100,000 to anyone who could pass the test in front of a panel of judges.

As we pass through the next 70 years, we can ask: has Turing’s Test been passed?

New Hampshire, USA

A few years later, in 1955, one of the founding fathers of AI, John McCarthy, and his colleagues, proposed a summer research project to debate the question of thinking machines.

When it came to naming the field, McCarthy chose the term ‘Artificial Intelligence’.

In the proposal, they wrote, ‘an attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves’.

The aim of the conference was to discuss questions such as whether machines could ‘self-improve’ and how neurons in the brain could be arranged to form ideas, along with topics like creativity and randomness, all to contribute to research on thinking machines. The conference was attended by at least twenty now well-known figures, including the mathematician John Nash.

Along with Turing’s paper, it was a foundational moment, marking the beginning of AI’s history.

But there were already difficulties that anticipated problems the field would face to this day. Many bemoaned the ‘artificial’ part of the name McCarthy chose. Does calling it artificial intelligence not limit what we mean by intelligence? What makes it artificial? What if the foundations are not artificial but the same as human intelligence? What if machines surpass human intelligence?

There were already suggestions that the answer to these questions might not be technological, but philosophical.

Because despite machines in some ways being more intelligent – making faster calculations, fewer mistakes – it was clear that that alone didn’t account for what we call intelligence – something was missing.

The first approach to AI, one that dominated the first few decades of research, was called the ‘symbolic’ approach.

The idea was that intelligence could be modelled symbolically by imitating or coding a digital replica of, for example, the human mind. If the mind has a movement area, you code a movement area, an emotional area, a calculating area, and so on. Symbolic approaches essentially made maps of the real world in the digital world.

If the world can be represented symbolically, AI could approach it logically.

For example, you could symbolise a kitchen in code, symbolise a state of the kitchen as clean or dirty, then program a robot to logically approach the environment – if the kitchen is dirty then clean the kitchen.
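A minimal Python sketch of that symbolic style follows. It assumes a made-up world model in which the kitchen is a handful of true/false facts and the robot’s “intelligence” is a list of hand-written if-then rules. The names (`world`, `decide`, `update`, the action strings) are hypothetical and chosen only for illustration.

```python
# A hypothetical symbolic world model: the kitchen is described by true/false facts.
world = {"kitchen_dirty": True, "robot_in_kitchen": False}

def decide(state):
    """Pick an action by checking hand-written if-then rules in order."""
    if state["kitchen_dirty"] and not state["robot_in_kitchen"]:
        return "go_to_kitchen"
    if state["kitchen_dirty"]:
        return "clean_kitchen"
    return "do_nothing"

def update(state, action):
    """Return the new symbolic description of the world after an action."""
    new_state = dict(state)
    if action == "go_to_kitchen":
        new_state["robot_in_kitchen"] = True
    elif action == "clean_kitchen":
        new_state["kitchen_dirty"] = False
    return new_state

# Apply the rules until there is nothing left to do, keeping a readable trace.
state, trace = world, []
while (action := decide(state)) != "do_nothing":
    trace.append(action)
    state = update(state, action)

print(trace)  # ['go_to_kitchen', 'clean_kitchen']
```

The trace also works as an explanation, because every action can be traced back to the rule that produced it.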

McCarthy, a proponent of this approach, wrote: ‘The idea is that an agent can represent knowledge of its world, its goals and the current situation by sentences in logic and decide what to do by [deducing] that a certain action or course of action is appropriate to achieve its goals.’

It makes sense because both humans and computers seem to work in this same way.

If the traffic light is red then stop the car. If hungry then eat. If tired then sleep.

The appeal to computer programmers was that approaching intelligence this way lined up with binary – the root of computing – that a transistor can be on or off, a 1 or 0, true or false. Red traffic light is either true or false, 1 or 0, it’s a binary logical question. If on, then stop. It seems intuitive and so building a symbolic, virtual, logical picture of the world in computers quickly became the most influential approach.

Computer scientist Michael Wooldridge writes that this was because, ‘It makes everything so pure. The whole problem of building an intelligent system is reduced to one of constructing a logical description of what the robot should do. And such a system is transparent: to understand why it did something, we can just look at its beliefs and its reasoning’.

But a problem quickly emerged. Knowledge turned out to be far too complex to be represented neatly by these simple, logical, true-false, if-then rules. One reason is shades of uncertainty. ‘If hungry then eat’ is not exactly true or false. There’s a gradient of hunger.

But another problem was that calculating what to do from these seemingly simple rules required much more knowledge and many more calculations than first assumed. The computing power of the period couldn’t keep up.

Take this simple game: The Towers of Hanoi. The object is to move the disks from the first to the last pole in the fewest number of moves without placing a larger disk on top of a smaller one.

We could symbolise the poles, the disks, and each possible move and the results of each possible move into the computer. And then a rule for what to do depending on each possible location of the disks. Relatively simple.

But consider this. With three disks this game is solvable in 7 moves. For 5 disks it takes 31 moves. For 10, it’s 1,023 moves. For 20 disks, 1,048,575 moves. For 64 disks, if one disk was moved each second, it would take almost 600 billion years to complete the game.
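The numbers above follow the formula 2^n − 1 for n disks. A short recursive sketch can check them: move the smaller disks out of the way, move the largest disk, then put the smaller disks back on top. Pole names "A", "B" and "C" are arbitrary labels chosen for the example.

```python
def hanoi_moves(n, source="A", target="C", spare="B"):
    """Yield each move (from_pole, to_pole) needed to shift n disks from source to target."""
    if n == 0:
        return
    # Park the n-1 smaller disks on the spare pole, out of the way.
    yield from hanoi_moves(n - 1, source, spare, target)
    # Move the largest disk to its destination.
    yield (source, target)
    # Stack the n-1 smaller disks back on top of it.
    yield from hanoi_moves(n - 1, spare, target, source)

# Counting the moves by actually generating them agrees with 2**n - 1.
for n in (3, 5, 10):
    print(n, sum(1 for _ in hanoi_moves(n)), 2**n - 1)

# For larger n, use the formula: each extra disk doubles the work (plus one move).
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60
years_for_64 = (2**64 - 1) / SECONDS_PER_YEAR  # at one move per second
print(f"20 disks: {2**20 - 1:,} moves")
print(f"64 disks: about {years_for_64 / 1e9:.0f} billion years")
```

The last line comes out at roughly 585 billion years, which matches the “almost 600 billion” figure.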

In AI this problem was named combinatorial explosion: as you increase the number of possible actions at each step, the number of possible combinations, and with it the complexity, becomes incomprehensibly vast and technologically difficult to handle.
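The arithmetic behind the term fits in one line. For simplicity, assume every position offers the same number of options, the branching factor $b$, and that we look $d$ moves ahead. The number of move sequences to examine is then $b^d$. The branching factors used below, roughly 35 for chess and 250 for Go, are commonly quoted averages rather than exact figures:

$$
N(b, d) = b^{d}, \qquad N(35, 10) = 35^{10} \approx 2.8 \times 10^{15}, \qquad N(250, 10) = 250^{10} \approx 9.5 \times 10^{23}
$$

Each extra move of look-ahead multiplies the work by $b$ again, which is why faster hardware alone could not keep up.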

And the Towers of Hanoi is a simple game. Combinatorial explosion became even more of a problem with games like chess or Go. And a human problem like driving is infinitely more complicated. The red light is on or off, but it might be broken, it might have graffiti or snow on it, pedestrians might walk out regardless, a child might run across – and that’s just the first element of a vast environment, the impossible totality.

Approaching AI this way was called ‘search’: for each move, the computer had to search through each possible scenario and every bit of information to decide what to do.
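To make that exhaustive approach concrete, here is a rough sketch applied to the Towers of Hanoi. It uses breadth-first search, one standard textbook strategy chosen here for simplicity; the text does not name a specific algorithm. Every arrangement of disks is a state, and the search explores outward from the start until it meets the goal. The state encoding (a tuple giving the pole of each disk) is an assumption made for this example.

```python
from collections import deque

def legal_moves(state):
    """Yield successor states. state[i] is the pole (0, 1 or 2) holding disk i; disk 0 is the smallest."""
    tops = {}
    for disk, pole in enumerate(state):
        tops.setdefault(pole, disk)  # the first (smallest) disk seen on a pole is its top disk
    for from_pole, disk in tops.items():
        for to_pole in range(3):
            if to_pole == from_pole:
                continue
            # Legal only if the destination pole is empty or its top disk is larger.
            if to_pole not in tops or tops[to_pole] > disk:
                new_state = list(state)
                new_state[disk] = to_pole
                yield tuple(new_state)

def bfs(n):
    """Search outward from the start until the goal appears.
    Returns (moves in the shortest solution, number of states stored along the way)."""
    start, goal = (0,) * n, (2,) * n
    frontier = deque([start])
    depth = {start: 0}
    while frontier:
        state = frontier.popleft()
        if state == goal:
            return depth[state], len(depth)
        for nxt in legal_moves(state):
            if nxt not in depth:
                depth[nxt] = depth[state] + 1
                frontier.append(nxt)
    raise ValueError("goal unreachable")

for n in range(1, 9):
    moves, visited = bfs(n)
    print(f"{n} disks: {moves} moves found after storing {visited} states")
```

The search finds the same 2^n − 1 moves. The number of states it has to store is bounded by 3^n, because each disk can sit on any of three poles, so both the work and the memory grow exponentially with every disk added.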

In robotics, a similar approach was being taken, and proving even more complicated.

The development of AI coincided with technological advances in other areas: infrared, radars, cameras, microphones, batteries.

In 1971, Terry Winograd at MIT was developing a program called SHRDLU that aimed to symbolically model something he called blocks world.

In this virtual world, a user could ask the program to manipulate the blocks in different ways:

Person: Pick up a big red block.

Computer: OK.

Person: Grasp the pyramid.

Computer: I DON’T UNDERSTAND WHICH PYRAMID YOU MEAN.

Person (changing their mind): Find a block which is taller than the one you are holding and put it into the box.

Computer: BY “IT”, I ASSUME YOU MEAN THE BLOCK WHICH IS TALLER THAN THE ONE I AM HOLDING.

Computer: OK.
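Behind a reply like “I DON’T UNDERSTAND WHICH PYRAMID YOU MEAN” is a simple symbolic check: how many objects in the world model match the description? The sketch below is hypothetical and only illustrates that one step. The objects, the `resolve` helper and the reply strings are invented for the example, and SHRDLU’s real language handling was far more sophisticated.

```python
from dataclasses import dataclass

@dataclass
class Thing:
    name: str
    shape: str
    colour: str

# A hypothetical blocks world containing two pyramids.
WORLD = [
    Thing("b1", "block", "red"),
    Thing("b2", "block", "green"),
    Thing("p1", "pyramid", "red"),
    Thing("p2", "pyramid", "blue"),
]

def resolve(shape, colour=None):
    """Find the one object matching a description, or say why that is impossible."""
    matches = [t for t in WORLD
               if t.shape == shape and (colour is None or t.colour == colour)]
    if not matches:
        return None, f"I CAN'T FIND A {shape.upper()}."
    if len(matches) > 1:
        return None, f"I DON'T UNDERSTAND WHICH {shape.upper()} YOU MEAN."
    return matches[0], "OK."

print(resolve("block", "red")[1])     # OK.
print(resolve("pyramid")[1])          # I DON'T UNDERSTAND WHICH PYRAMID YOU MEAN.
print(resolve("pyramid", "blue")[1])  # OK.
```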

Around the same time, researchers at the Stanford Research Institute built a real-life blocks world.

SHAKEY was a real robot that had bump sensors called ‘cat’s whiskers’ and laser range finders to measure distance.

The robotics teams ran into problems similar to those of the Towers of Hanoi. The environment was much more complicated than it seemed. The room had to be painted in a specific way for the sensors to work properly.

The technology of the time could not keep up, and combinatorial explosion, the complexity of any environment, became such a problem that the 70s and 80s saw what’s now referred to as the AI winter.

History of AI: The Impossible Totality of Knowledge

By the 70s, some were beginning to make the case that something was being left out: knowledge. The real world is not towers of Hanoi, robots and blocks – knowledge about the world is central. However, logic was still the key to analysing that knowledge. How could it be otherwise?

For example, if you want to know about animals, you need a database:

IF animal gives milk THEN animal is mammal

IF animal has feathers THEN animal is bird

IF animal can fly AND animal lays eggs THEN animal is bird

IF animal eats meat THEN animal is carnivore
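One common way to run rules like these is forward chaining: keep applying any rule whose IF part is satisfied until no new facts appear. Here is a minimal sketch that assumes facts are written as plain strings. The rule format is invented for illustration and is not taken from any particular expert system.

```python
# Each rule: (set of conditions that must all hold, conclusion to add).
RULES = [
    ({"gives milk"}, "is mammal"),
    ({"has feathers"}, "is bird"),
    ({"can fly", "lays eggs"}, "is bird"),
    ({"eats meat"}, "is carnivore"),
]

def forward_chain(facts):
    """Repeatedly fire any rule whose conditions are all known, until nothing new is learned."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

print(sorted(forward_chain({"gives milk", "eats meat"})))
# ['eats meat', 'gives milk', 'is carnivore', 'is mammal']
```

Every fact the system can conclude has to be anticipated by a human-written rule, which is exactly where the need for experts comes from.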

Again, this seems relatively simple, but even an example as basic as this requires a zoologist to provide the information. We all know that mammals are milk-producing animals, but there are thousands of species of mammal and a lot of specialist knowledge. As a result, this approach was named the ‘expert systems’ approach. And it led to one of the first big AI successes.

Researchers at Stanford used this approach to work with doctors to produce a system, MYCIN, to diagnose blood diseases. It used a combination of knowledge and logic.

IF a blood test shows X THEN perform Y.

Significantly, they realised that the application had to be credible if professionals were ever going to trust and adopt it. So MYCIN could show its workings and explain the answers it gave.

The system was a breakthrough. At first it proved to be as good as humans at diagnosing blood diseases.

Another similar system called DENDRAL used the same approach to analyse the structure of chemicals. DENDRAL used 175,000 rules provided by chemists.

Both systems proved that this type of expert knowledge approach could work.

The AI winter was over and, significantly, research began attracting investment.

But once again, expert system developers encountered a serious new problem: the MYCIN database very quickly became outdated.

In 1983, Edward Feigenbaum, a researcher on the project, wrote, ‘The knowledge is currently acquired in a very painstaking way that reminds one of cottage industries, in which individual computer scientists work with individual experts in disciplines painstakingly [...]. In the decades to come, we must have more automatic means for replacing what is currently a very tedious, time-consuming, and expensive procedure. The problem of knowledge acquisition is the key bottleneck problem in artificial intelligence’.

Because of this, MYCIN was not widely adopted. It proved expensive, quickly obsolete, legally questionable, and difficult to establish widely enough among doctors. Logic was understandable – but collecting knowledge, and the logistics of doing so, was becoming the obvious central problem.

In the 80s, influential computer scientist Douglas Lenat began a project that intended to solve this.

Lenat wrote: ‘[N]o powerful formalism can obviate the need for a lot of knowledge. By knowledge, we don’t just mean dry, almanac-like or highly domain-specific facts. Rather, most of what we need to know to get by in the real world is… too much common-sense to be included in reference books; for example, animals live for a single solid interval of time, nothing can be in two places at once, animals don’t like pain… Perhaps the hardest truth to face, one that AI has been trying to wriggle out of for 34 years, is that there is probably no elegant, effortless way to obtain this immense knowledge base. Rather, the bulk of the effort must (at least initially) be manual entry of assertion after assertion’.

The goal of Lenat’s CYC project was to teach AI all of the knowledge we usually think of as obvious. He said: ‘an object dropped on planet Earth will fall to the ground and that it will stop moving when it hits the ground but that an object dropped in space will not fall; a plane that runs out of fuel will crash; people tend to die in plane crashes; it is dangerous to eat mushrooms you don’t recognize; red taps usually produce hot water, while blue taps usually produce cold water; … and so on’.

Lenat and his team estimated that it would take 200 years of work, and they set about laboriously entering 500,000 rules on taken-for-granted things, like that bread is a food or that Isaac Newton is dead.

They quickly ran into problems. The CYC project’s blind spots were illustrative of how strange knowledge can be.

In an early demonstration, it didn’t know whether bread was a drink, whether the sky was blue, whether the sea was wetter than land, or whether siblings could be taller than each other.

These simple questions reveal something under-appreciated about knowledge. Often, we don’t explicitly know something ourselves, yet the answer is laughably obvious. We might never have thought about whether bread is a drink, or whether one sibling can be taller than another, but when asked, we implicitly, intuitively, often non-cognitively just know the answers based on other factors.

This was a serious difficulty. No matter how much knowledge you entered, the ways that knowledge is understood, how we think about questions, the relationships between one piece of knowledge and another, the connections we draw on, are often ambiguous, unclear, and even strange.

Logic struggles with nuance, uncertainty, probability. It struggles with things we implicitly understand but also might find difficult to explicitly explain.

Take one common example you’ll find in AI handbooks:

Quakers are pacifists.

Republicans are not pacifists.

Nixon is a Republican and a Quaker.

Is Nixon a pacifist or not? A computer cannot answer this logically with this information; it sees a contradiction. A human, by contrast, might explain the problem in many different ways, drawing on lots of different ideas – uncertainty, truthfulness, complexity, history, war, politics.
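A minimal sketch of where a naive rule engine gets stuck follows. It assumes an engine that simply applies every matching rule; the rule format is hypothetical. Both rules fire for Nixon, and the engine is left holding two opposite answers with no principled way to choose between them.

```python
# Each rule: (category, (property, value it asserts)).
RULES = [
    ("quaker", ("pacifist", True)),
    ("republican", ("pacifist", False)),
]

def conclusions(categories):
    """Apply every matching rule and collect all the values asserted for each property."""
    derived = {}
    for category, (prop, value) in RULES:
        if category in categories:
            derived.setdefault(prop, set()).add(value)
    return derived

nixon = {"quaker", "republican"}
result = conclusions(nixon)
contradictions = [prop for prop, values in result.items() if len(values) > 1]
print(contradictions)  # ['pacifist']
```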

The big question for proponents of expert-based knowledge systems like CYC – which still runs to this day – is whether complexities can ever be accounted for with this logic based approach.

Most intelligent questions aren’t of the if-then, yes-no, binary sort, like: is a cat a mammal?

Consider the question ‘are taxes good?’ It’s of a radically different kind than ‘is a cat a mammal?’. Most questions rely on values; they depend on contexts, definitions, and assumptions; they are subjective.

Wooldridge writes: ‘The main difficulty was what became known as the knowledge elicitation problem. Put simply, this is the problem of extracting knowledge from human experts and encoding it in the form of rules. Human experts often find it hard to articulate the expertise they have—the fact that they are good at something does not mean that they can tell you how they actually do it. And human experts, it transpired, were not necessarily all that eager to share their expertise’.

But CYC was on the right path. Knowledge was obviously needed. It was a question of how to get your hands on it, how to digitise it, and how to label, parse, and analyse it. As a result of this, McCarthy’s idea – that logic was the centre of intelligence – fell out of favour. The logic-centric approach was like saying a calculator is intelligent because it can perform calculations, when it doesn’t really know anything. More knowledge was key.

The same was happening in robotics.

Australian roboticist Rodney Brooks, an innovator in the field, argued that the issue with simulations like Blocks World was that they were simulated and tightly controlled. Real intelligence didn’t evolve in that way, and so real knowledge had to come from the real world.

He argued that perhaps intelligence wasn’t something that could be coded in but was an ‘emergent property’ – something that emerges once all of the other components are in place. If artificial intelligence could be built up from everyday experience, genuine intelligence might develop once other, more basic conditions had been met. In other words, intelligence might be bottom-up, arising out of all of the parts, rather than top-down, imparted from a central intelligent point into all of the parts. Evolution, for example, is bottom-up, slowly adding more and more complexity to single-cell organisms until consciousness and awareness emerge.

In the early 90s, Brooks, then at MIT’s Artificial Intelligence Laboratory, railed against the idea that intelligence was a disembodied, abstract thing. Why could a machine beat any human at chess but not pick up a chess piece better than a child, he asked? Not only that, the child moves the hand to pick up the chess piece automatically, without any obvious complex computation going on in the brain. In fact, the brain doesn’t seem to have anything like a central command centre – all of the parts interact with one another, more like a city than like a pilot flying the entire thing.

Intelligence was connected to the world, not cut off, ethereal, transcendent, and abstract.

Brooks worked on intelligence as embodied – connected to its surroundings through sensors and cameras, microphones, arms and lasers. The team built a humanoid robot called Cog. It had thermal sensors and microphones, but importantly no central control point. Each part worked independently but interacted with the others – they called it ‘decentralised intelligence’.

It was an innovative approach but never quite worked. Brooks admitted Cog lacked ‘coherence’.

And by the late 90s, researchers were realising that computer power still mattered.

In 1996, IBM’s chess AI – Deep Blue – was beaten by grandmaster Garry Kasparov.

Deep Blue was an expert knowledge system. With the help of chess players, it was programmed not just to calculate each possible move but to draw on things like the best opening moves, concepts like ‘lines of attack’, and ideas like choosing moves based on pawn position.

But IBM also threw more computing power at it. Deep Blue could search through 200 million possible moves per second with its 500 processors.
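Deep Blue’s search was a heavily engineered form of minimax game-tree search: alpha-beta pruning running on custom hardware, combined with a hand-tuned evaluation of positions. The sketch below shows only the underlying minimax idea, written in its compact “negamax” form. It uses an assumed toy game instead of chess: players alternately take 1 to 3 stones, and whoever takes the last stone wins. The game is small enough to search to the very end.

```python
from functools import lru_cache

MAX_TAKE = 3  # a player may remove 1, 2 or 3 stones per turn

@lru_cache(maxsize=None)
def value(stones):
    """+1 if the player about to move can force a win, -1 otherwise."""
    if stones == 0:
        return -1  # the previous player took the last stone, so the player to move has lost
    # Try every legal move; the opponent's best outcome is our worst, hence the negation.
    return max(-value(stones - take) for take in range(1, min(MAX_TAKE, stones) + 1))

def best_move(stones):
    """Choose how many stones to take so as to leave the opponent worst off."""
    return max(range(1, min(MAX_TAKE, stones) + 1), key=lambda take: -value(stones - take))

print(best_move(5))                                  # 1: leaves 4, a losing position for the opponent
print([n for n in range(1, 13) if value(n) == -1])   # [4, 8, 12]
```

In chess the tree cannot be searched to the end, so a real engine stops at some depth and scores positions with an evaluation function. That is where the chess players’ knowledge about openings, attacks and pawns comes in.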

It played Kasparov again in 1997. In a milestone for AI, Deep Blue won. At first, Kasparov accused IBM of cheating, and to this day maintains foul play of a sort. In his book, he recounts an episode in which a chess player working for IBM admitted to him that: ‘Every morning we had meetings with all the team, the engineers, communication people, everybody. A professional approach such as I never saw in my life. All details were taken into account. I will tell you something which was very secret[…] One day I said, Kasparov speaks to Dokhoian after the games. I would like to know what they say. Can we change the security guard, and replace him with someone that speaks Russian? The next day they changed the guy, so I knew what they spoke about after the game’.

In other words, even with 500 processors and 200 million moves per second, IBM may still have had to program in very specific knowledge about Kasparov himself by listening in to conversations – this, if maybe apocryphal, was at least a premonition of things to come…

The Learning Revolution

In 2014, Google announced it was acquiring a small, relatively unknown, four-year-old AI lab from the UK for $650 million. The acquisition sent shockwaves through the AI community.

DeepMind had done something that on the surface seemed quite simple: beaten an old Atari game.

But how it did it was much more interesting. New buzzwords began entering the mainstream: machine learning, deep learning, neural nets.

What knowledge-based approaches to AI had found difficult was collecting that knowledge successfully. MYCIN had quickly become obsolete. CYC missed things that most people found obvious. Entering the totality of human knowledge was impossible, and besides, an average human doesn’t have all of that knowledge but still has the intelligence researchers were trying to replicate.

A new approach emerged: if we can’t teach machines everything, how can we teach them to learn for themselves?

Instead of starting from having as much knowledge as possible, machine learning begins with a goal. From that goal, it acquires the knowledge it needs itself through trial and error.

Wooldridge writes, ‘the goal of machine learning is to have programs that can compute a desired output from a given input, without being given an explicit recipe for how to do this’.

Incredibly, DeepMind had built an AI that could learn to play and win not just one Atari game, but many of them, all on its own.

The machine learning premise they adopted was relatively simple.

The AI was given the controls and a preference: increase the score. And then through trial and error, it would try different actions, and iterate or expand on what worked and what didn’t. A human assistant could help by nudging it in the right direction if it got stuck.

This is called ‘reinforcement learning’. If a series of actions led to the AI losing a point it would register that as likely bad, and vice versa. Then it would play the game thousands of times, building on the patterns that worked.
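A minimal sketch of this idea uses tabular Q-learning, one classic reinforcement-learning algorithm. DeepMind’s Atari system combined a related method with a deep neural network that read the screen; none of that is reproduced here. The “game” is an assumed toy: a corridor of six positions, where reaching the far end scores a point. The agent starts knowing nothing and learns by trial and error which action tends to lead to points.

```python
import random

N_STATES = 6           # corridor positions 0..5; reaching position 5 "scores a point"
ACTIONS = (-1, +1)     # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount, exploration rate

def step(state, action):
    """Toy game dynamics: move along the corridor, return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=500, seed=0):
    """Tabular Q-learning: learn a score for every (state, action) pair by trial and error."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Usually repeat what has worked best so far; sometimes try something random.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: (q[(state, a)], rng.random()))
            nxt, reward, done = step(state, action)
            # Move the estimate towards: reward now + discounted best estimate from the next state.
            future = 0.0 if done else GAMMA * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + future - q[(state, action)])
            state = nxt
    return q

q = train()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1, 1]: always step towards the point
```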

What was incredible was it didn’t just learn the game, but quickly became better than the humans. It learned to play 29 out of 49 games at a level better than a human. Then it became superhuman.

Breakout is the most often demonstrated example: move the paddle, destroy the blocks with the ball. To the developers’ surprise, the AI learned to dig a tunnel through one side of the wall so the ball would get behind it and bounce around at the top, destroying blocks without the AI having to do anything. It was described as spontaneous, independent, and creative.

Next, DeepMind beat a human player at Go, commonly believed to be harder than chess, and likely the most difficult game in the world.

Go is deceptively simple. Players take turns placing stones, trying to enclose more territory than their opponent while surrounding the opponent’s stones to capture them.

AlphaGo was trained on 160,000 top games and played over 30 million games itself before beating Lee Sedol in 2016.

Remember combinatorial explosion. This was always a problem with Go. Because there are so many possibilities it’s impossible to calculate every move.

Instead, DeepMind’s method was based on sophisticated guessing around uncertainty. It would calculate the chances of winning based on a move rather than calculating and playing through all the future moves after each move. The premise was that this is more how human intelligence works. We scan, contemplate a few moves ahead, reject, imagine a different move, and so on.
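The “estimate the chances instead of calculating everything” idea can be sketched with flat Monte Carlo evaluation. This is a much simpler relative of the Monte Carlo tree search AlphaGo used; AlphaGo also guided its search with neural networks, which are left out entirely here. The sketch assumes a toy game instead of Go (take 1 to 3 stones; whoever takes the last stone wins). Each candidate move is scored by playing many random games to the end and counting how often they are won.

```python
import random

MAX_TAKE = 3  # toy game: take 1-3 stones per turn; whoever takes the last stone wins

def random_playout(stones, rng):
    """Finish the game with random moves. Return True if the player who moves first here wins."""
    first_player_turn = True
    while True:
        stones -= rng.randint(1, min(MAX_TAKE, stones))
        if stones == 0:
            return first_player_turn  # whoever just moved took the last stone
        first_player_turn = not first_player_turn

def estimate_win_rates(stones, playouts=2000, seed=0):
    """Estimate, for each legal move, how often random continuations end in our win."""
    rng = random.Random(seed)
    rates = {}
    for take in range(1, min(MAX_TAKE, stones) + 1):
        remaining = stones - take
        if remaining == 0:
            rates[take] = 1.0  # taking the last stone wins outright
            continue
        # After our move the opponent moves first, so we win whenever they lose the playout.
        wins = sum(not random_playout(remaining, rng) for _ in range(playouts))
        rates[take] = wins / playouts
    return rates

rates = estimate_win_rates(5)
print(rates)
print(max(rates, key=rates.get))  # 1
```

With 5 stones, the exact chances under random play are 2/3 for taking one stone and 1/2 for taking two or three, so the estimates should settle near those values. No full game tree is ever built; the program just samples and compares.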

In the second game against Lee Sedol, AlphaGo’s 37th move took everyone by surprise. None of the humans could understand it, and the professionals described it as ‘creative’, ‘unique’ and ‘beautiful’, as well as ‘inhuman’.

The victory made headlines around the world. The age of machine learning had arrived.

What Are Neural Nets?

In 1991, two scientists wrote, ‘The neural network revolution has happened. We are living in the aftermath.’

You might have heard some new buzzwords thrown around – neural nets, deep learning, machine learning. I’ve come to believe that this revolution is probably the most historically consequential we’ll go through as a species. It’s fundamental to what’s happening with AI. So bear with me, jump on board the neural pathway rollercoaster, buckle up and get those synapses ready, and we’ll try and make this as pain-free as possible.

Remember that the symbolic approach we talked about tried to make a kind of one-to-one map of the world, whereas machine learning learns for itself through trial and error. AI mostly does this using neural nets.

Neural nets are revolutionising the way we think about not just AI, but intelligence. They’re based on the premise that what matters are connections, patterns, pathways.

Artificial neural nets are inspired by neural nets in the brain.

Both in the brain and in artificial neural nets (ANNs), the basic building blocks are neurons, or nodes. The neurons are layered, and there are connections between them.

Each neuron can activate the next. The more of the neurons feeding into a neuron are activated, and the stronger their connections, the stronger its activation. If that activation is strong enough to pass a threshold, the neuron fires and activates the next one. And so on, billions of times.
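Here is a minimal sketch of a single artificial neuron. It assumes the classic step (“threshold”) activation; modern networks usually use smooth activation functions instead, and all the weights here are made up. The neuron adds up its weighted inputs and fires only if the total clears the threshold, set by a negative bias.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of incoming signals plus a bias; fire (1) only if the total is above zero."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

weights = [0.6, 0.6, 0.6]
bias = -1.0  # the threshold: one active input (0.6) is not enough, two (1.2) are

for inputs in ([1, 0, 0], [1, 1, 0], [1, 1, 1]):
    print(inputs, neuron(inputs, weights, bias))
# [1, 0, 0] 0
# [1, 1, 0] 1
# [1, 1, 1] 1

# Chaining: one neuron's output becomes an input to the next one.
hidden = neuron([1, 1, 0], weights, bias)
output = neuron([hidden, 1], [0.7, 0.7], -1.0)
print(output)  # 1
```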

In this way intelligence can make predictions based on past experiences.

I think of neural nets – in the brain and artificially – as something like ‘commonly travelled paths’. The more often and the more successfully neurons fire together, the more their connections strengthen. Hence the phrase ‘neurons that fire together wire together’.
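That slogan describes Hebbian learning. In its simplest textbook form, the change in a connection’s weight is learning_rate × (activity before) × (activity after). Below is a minimal sketch with made-up activity patterns. It illustrates the “fire together, wire together” idea only; systems like ChatGPT are trained differently, by gradient descent with backpropagation.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Strengthen a connection in proportion to how often both neurons are active together."""
    return weight + learning_rate * pre * post

# Made-up activity of two neurons over five time steps (1 = firing).
pre_activity  = [1, 1, 0, 1, 1]
post_activity = [1, 1, 1, 0, 1]

w = 0.0
for pre, post in zip(pre_activity, post_activity):
    w = hebbian_update(w, pre, post)
print(round(w, 2))  # 0.3: they fired together on 3 of the 5 steps
```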

So how are these used in AI?

First, you need a lot of data, and you can get it in two ways. You can feed a neural net a lot of existing data – like thousands of professional Go or chess games. Or you can have it play games over and over, on many different computers, thousands of times. Peter Whidden has a video that shows an AI playing 20,000 games of Pokémon at once.

Ok, so once you have lots of data, the next job is to find patterns. If you know a pattern, you might be able to predict what comes next.

ChatGPT and others are large language models – meaning they’re neural networks trained on lots of text. And I mean a lot. ChatGPT was trained on around 300 billion words of text. If you’re thinking ‘whose words’ you might be onto something we’ll get to shortly.

The cat sat on the… If you automatically thought ‘mat’, then you have some intuitive idea of how large language models work.

Because in 300 billion words’ worth of text that pattern comes up a lot, ChatGPT can predict that ‘mat’ is what should come next.
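The “which word usually comes next?” intuition can be sketched by plain counting. The sketch uses a trigram model, a much simpler, pre-neural technique: it looks at the two previous words and tallies what followed them. Large language models learn these statistics with neural networks over far longer contexts. The tiny corpus is invented for the example.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; real models see hundreds of billions of words.
corpus = (
    "the cat sat on the mat . the dog sat on the mat . "
    "the cat sat on the sofa . the cat sat on the elephant ."
).split()

# For every pair of consecutive words, count which word came next.
following = defaultdict(Counter)
for first, second, nxt in zip(corpus, corpus[1:], corpus[2:]):
    following[(first, second)][nxt] += 1

def predict(first, second):
    """Return the word seen most often after the pair (first, second)."""
    return following[(first, second)].most_common(1)[0][0]

def probabilities(first, second):
    """Turn the raw counts into probabilities for each possible next word."""
    counts = following[(first, second)]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

print(predict("on", "the"))        # mat
print(probabilities("on", "the"))  # {'mat': 0.5, 'sofa': 0.25, 'elephant': 0.25}
```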

But what if I say the cat sat on the… elephant?

Remember that one of the problems previous approaches ran into was that not all knowledge is binary, on or off, 1 or 0? Not all knowledge is like, ‘if an animal gives milk then it’s a mammal’.

Neural networks are particularly powerful because they avoid this and can instead work with probability, ambiguity, and uncertainty. Neural net nodes, remember, have strengths. The neurons pointing towards ‘mat’ fire strongly, but others still fire a little. If I ask for another, random example, it can switch to ‘elephant’. If it’s looking at patterns after the words ‘heads’, ‘or’, ‘tails’, the successive nodes are going to be pretty evenly split, 50/50, between heads and tails.
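Neural language models typically turn their raw scores for candidate next words into probabilities with a softmax function. They can then either pick the most likely word or sample, which lets less likely words such as ‘elephant’ occasionally appear. Below is a minimal sketch. The scores are invented for illustration and do not come from any real model.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    exps = {word: math.exp(s) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores a model might assign to the word after "the cat sat on the".
scores = {"mat": 4.0, "sofa": 2.0, "floor": 2.0, "elephant": 0.5}
probs = softmax(scores)
print(max(probs, key=probs.get))  # mat

# Sampling instead of always taking the top word lets unlikely continuations appear now and then.
rng = random.Random(42)
samples = rng.choices(list(probs), weights=list(probs.values()), k=1000)
print(samples.count("mat"), samples.count("elephant"))

# Two equally scored options give an even split.
print(softmax({"heads": 1.0, "tails": 1.0}))  # {'heads': 0.5, 'tails': 0.5}
```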

If I ask ‘are taxes good?’, it’s going to see that there are different arguments and can draw from all of them.

Kate Crawford puts it like this: ‘they started using statistical methods that focused more on how often words appeared in relation to one another, rather than trying to teach computers a rules-based approach using grammatical principles or linguistic features’.

The same applies to images.

How do you teach a computer that an image of an A is an A or a 9 is a 9? Because every example is slightly different. Sometimes they’re in photos, on signposts, written, scribbled, at strange angles, in different shades, with imperfections, upside down even. If you feed the neural net millions of drawings, photos, designs of a 9 it can learn which patterns repeat until it can recognise a 9 on its own.

The problem is that you need a lot of examples. In fact, this is what you’re doing when you fill in those reCAPTCHAs – you’re helping Google train its AI.

There are some sources in the description if you want to learn more about neural nets. This video by 3Blue1Brown on training numbers and letters is particularly good.

Developer Tim Dettmers describes deep learning like this: ‘(1) take some data, (2) train a model on that data, and (3) use the trained model to make predictions on new data’.
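Those three steps can be sketched with the smallest possible neural net, a single-neuron perceptron, applied to a toy version of the image problem. Two invented 3×3 “letters” (an X and an O) are shown in imperfect versions, each with one pixel flipped. The perceptron learns weights that tell them apart, and the trained model then classifies a smudged X it has never seen. Everything here (shapes, sizes, the perceptron itself) is an illustrative assumption, far smaller than real image models.

```python
# Step 1: take some data. Two hypothetical 3x3 "letters", flattened row by row.
X_SHAPE = [1, 0, 1,
           0, 1, 0,
           1, 0, 1]
O_SHAPE = [1, 1, 1,
           1, 0, 1,
           1, 1, 1]

def noisy_copies(shape):
    """Every version of `shape` with exactly one pixel flipped: imperfect examples."""
    copies = []
    for i in range(len(shape)):
        copy = list(shape)
        copy[i] = 1 - copy[i]
        copies.append(copy)
    return copies

data = [(img, +1) for img in noisy_copies(X_SHAPE)] + [(img, -1) for img in noisy_copies(O_SHAPE)]

# Step 2: train a model (a single-neuron perceptron) on that data.
def train_perceptron(examples, max_epochs=1000):
    weights, bias = [0.0] * len(examples[0][0]), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for pixels, label in examples:
            score = sum(w * p for w, p in zip(weights, pixels)) + bias
            if label * score <= 0:  # wrong or undecided: nudge the weights towards the right answer
                weights = [w + label * p for w, p in zip(weights, pixels)]
                bias += label
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            return weights, bias
    raise RuntimeError("did not converge")

weights, bias = train_perceptron(data)

# Step 3: use the trained model to make predictions on new data.
def predict(pixels):
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return "X" if score > 0 else "O"

# A new, smudged X (grey pixels = 0.5) that is not in the training data.
smudged_x = [0.5, 0.5, 1,
             0,   1,   0,
             1,   0,   1]
print(predict(smudged_x))  # X
```

The smudged X is the average of two training examples. Because the model is linear, any weights that score both of those as X also score their average as X, so this particular prediction is guaranteed once training has converged.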

The neural network revolution has some ground-breaking ramifications. First, intelligence isn’t some abstract, transcendental, ethereal thing; connections between things are what matter, and those connections allow us, and AI, to predict the next move. We’ll get back to this. But second, machine learning researchers were realising that, for this to work, they needed a lot of knowledge, a lot of data. It was no use getting chemists and blood diagnostic experts to come into the lab once a month and laboriously type in their latest research. Plus it was expensive.

In 2017 an artificial neural net could have around 1 million nodes. The human brain has around 100 billion. A bee has about 1 million too, and a bee is pretty intelligent. But one company was about to smash past that record, surpassing humans as they went.

By the 2010s, fast internet was rolling out all over the world, phones with cameras were in everyone’s pockets, and new media were broadcasting information on anything anyone wanted to know. We were stepping into the age of big data.

AI was about to become a teenager.

OpenAI and ChatGPT

Silicon Valley

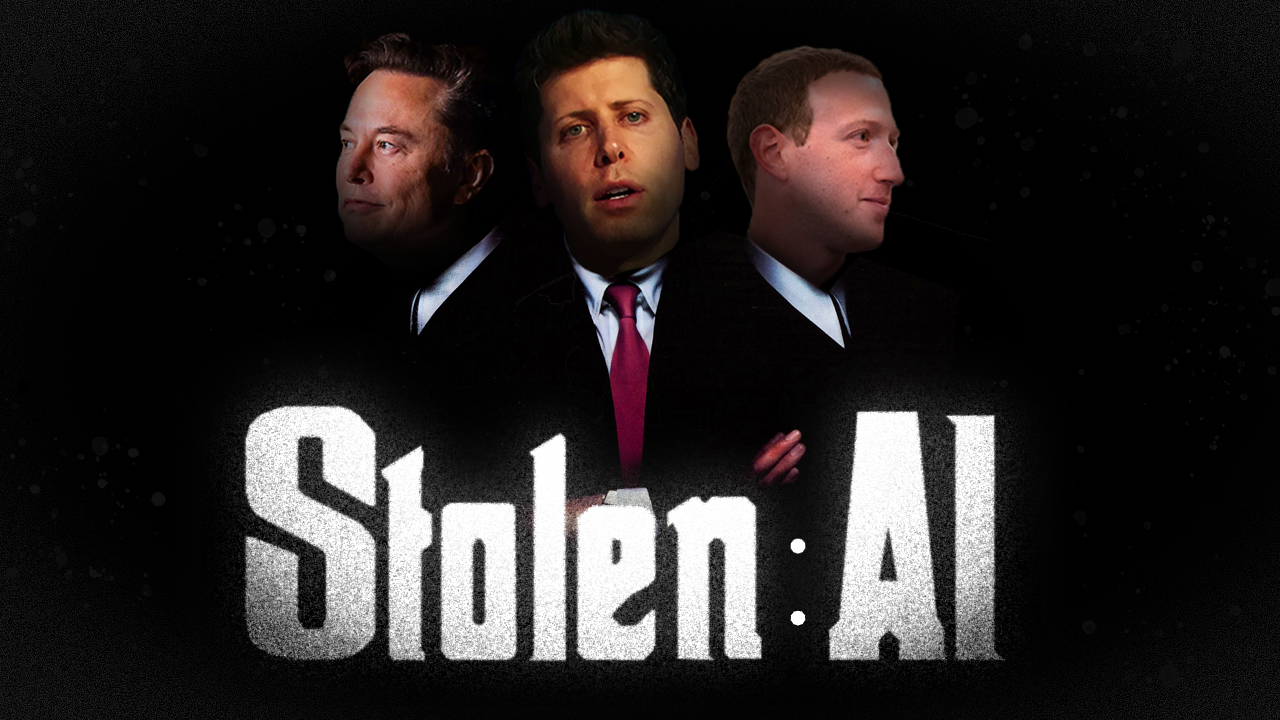

There’s a story, likely apocryphal, that Google founder Larry Page called Elon Musk a ‘speciesist’ because he privileged human life over other forms of life, including potential artificial superintelligence. If AI becomes better and more important than humans, then there’s really no reason to prioritise, privilege, and protect humans at all. Maybe the robots really should take over.

Musk says that this caused him to worry about the future of AI, especially as Google, after acquiring DeepMind, was at the forefront of AI development.

And so, despite being a billionaire businessman himself, Musk became concerned that AI was being developed behind the closed doors of multi-billion-dollar corporations.

In 2015 he co-founded OpenAI. Its goal was to be the first to develop artificial general intelligence in a safe, open, and humane way.

AI was getting very good at performing narrow tasks. Google Translate, social media algorithms, GPS navigation, scientific research tools, chatbots, and even calculators are referred to as ‘narrow artificial intelligence’.

Narrow AI has been something of a quiet revolution. It’s already, slowly and pervasively, everywhere. There are over 30 million robots in our homes and 3 million in factories. Soon everything will be infused with narrow AI, from your kettle and your lawnmower to your doorknobs and shoes.

The purpose of OpenAI was to pursue that more general artificial intelligence – what we think of when we see AI in movies – intelligence that can cross over from task to task, do unexpected, creative things, and act, broadly, like a human does.

AI researcher Luke Muehlhauser describes artificial general intelligence – or AGI as it’s known – as ‘the capacity for efficient cross-domain optimization’, or ‘the ability to transfer learning from one domain to other domains’.

With donations from titanic Silicon Valley venture capitalists like Peter Thiel and Sam Altman, OpenAI started as a non-profit focused on transparency and openness, with a mission, in its own founding charter’s words, to ‘build value for everyone rather than shareholders’. It promised to publish its studies and share its patents and, above all, to focus on humanity.

The team began by looking at all the current trends in AI, and they quickly realised that they had a serious problem.

The best approach – neural nets and deep machine learning – required a lot of data, a lot of servers, and importantly, a lot of computing power. Something their main rival Google had plenty of. If they had any hope of keeping up with the wealthy big tech corporations, they’d unavoidably need more money than they had as a non-profit.

By 2017, OpenAI had decided that, while it would stick to its original mission, it needed to restructure as a for-profit, in part to raise capital.

They settled on a ‘capped-profit’ structure with a 100-fold limit on returns, overseen by the non-profit board, whose values were aligned with that original mission rather than with shareholder value.

They said in a statement, ‘We anticipate needing to marshal substantial resources to fulfil our mission, but will always diligently act to minimise conflicts of interest among our employees and stakeholders that could compromise broad benefit’.

The decision paid off. On February 14, 2019, OpenAI announced GPT-2, a model that could produce written articles on any subject that were indistinguishable from human writing. However, it claimed the model was too dangerous to release.

People assumed it was a publicity stunt.

In 2022, OpenAI released ChatGPT, a large language model (LLM) chatbot that seemed able to pass, at least in part, the Turing Test.

You could ask it anything, and it could write anything, in different styles. It could pass many exams, and by the time it was running on GPT-4 it could pass the SAT, the bar exam, and biology, high school maths, sommelier, and medical licensing exams.

ChatGPT attracted a million users in five days.

And by the end of 2023 it had 180 million users, making it the fastest-growing consumer application in history.

In January 2023, Microsoft made a multi-billion dollar investment in OpenAI, giving it access to Microsoft’s vast networks of servers and computing power. Microsoft began embedding ChatGPT into Windows and Bing.

But OpenAI had, suspiciously, become ‘ClosedAI’, and some began asking how ChatGPT knew so much, including much that wasn’t exactly free, open, and legally available on the internet. A dichotomy was emerging: between open and closed, transparency and opacity, the many and the one, democracy and profit.

It has some interesting similarities to the dichotomy we’ve seen in AI research from the beginning. On one side is intelligence as something singular, transcendent, abstract, almost ethereal. On the other is intelligence as something everywhere, worldly, open, connected, and embodied, running through the entirety of human experience, through the world and the universe.

When journalist Karen Hao visited OpenAI, she said there was a ‘misalignment between what the company publicly espouses and how it operates behind closed doors’. They’ve moved away from the belief that openness is the best approach. Now, as we’ll see, they believe secrecy is required.

The Scramble for Data

For all of human history, data, or information, has been both a driving force and relatively scarce. The Scientific Revolution and the Enlightenment accelerated the idea that knowledge could and should be acquired both for its own sake and to be put to use: to innovate, invent, advance, and progress.

Of course, the internet has always been about data. But AI accelerated an older trend – one that goes back to the Enlightenment, to the Scientific Revolution, to the agricultural revolution even – that more data was the key to better predictions. Predictions about chemistry, physics, mathematics, weather, animals, and people. That if you plant a seed it tends to grow.

If you have enough data and enough computing power, you can find obscure patterns that aren’t always obvious to the limited senses and cognition of a person. And once you know the patterns, you can predict when they could or should recur in the future.

More data, more patterns, better predictions.
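The ‘more data, better predictions’ idea can be illustrated with a deliberately simple simulation. It estimates how often a hypothetical pattern holds (say, that 70% of planted seeds sprout) from an increasing number of observations. The 70% rate and the function name are invented for illustration, and this is basic sampling, not AI.

```python
import random


def estimate_rate(true_rate: float, n: int, seed: int = 0) -> float:
    """Observe n hypothetical trials and return the fraction that 'succeeded'."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    successes = sum(rng.random() < true_rate for _ in range(n))
    return successes / n


TRUE_RATE = 0.7  # hypothetical: 70% of planted seeds sprout
for n in (10, 1_000, 100_000):
    estimate = estimate_rate(TRUE_RATE, n)
    print(f"{n:>7} observations -> estimate {estimate:.3f} "
          f"(error {abs(estimate - TRUE_RATE):.3f})")
```

Small samples can land well away from 0.7, while large ones typically settle close to it. The typical error shrinks roughly in proportion to 1/sqrt(n). This holds on average, not on every single run.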

This is why the history of AI and the history of the internet are so closely aligned, and in fact part of the same process. It’s also why both are so intimately linked to the military and surveillance.

The internet was initially a military project. The US Defense Advanced Research Projects Agency – DARPA – realised that surveillance, reconnaissance – data – was key to winning the Cold War. Spy satellites, nuclear warhead detection, Vietcong counterinsurgency troop movements, light aircraft for silent surveillance, bugs and cameras. All of it needed extracting, collecting, analysing.

In 1950, a Time magazine cover imagined a thinking machine as a naval officer.

Five years earlier, before computers had even been invented, famed engineer

He predicted that the entirety of the Encyclopaedia Britannica could be reduced to the size of a matchbox and that we’d have cameras that could record, store, and share experiments.

But the generals had more pressing concerns. WWII had been fought with a vast number of rockets, and now that nuclear war was a possibility, these rockets needed to be detected and tracked so that their trajectories could be calculated and they could be shot down. As technology improved and rocket ranges increased, this information needed to be shared quickly across long distances.

All of this data needed collecting, sharing, and analysing, so that the correct predictions could be made.

The result was the internet.

Ever since, the appetite for data to make predictions with has grown, but the problem has always been how to collect it.

But by the 2010s, with the rise of high-speed internet, smartphones, and social media, vast numbers of people were, for the first time, willingly uploading terabytes of data about themselves.

All of it could be collected for better predictions. Philosopher Shoshana Zuboff argues that this appetite for data to make predictions encroaches on what she calls ‘the right to the future tense’.

Data has become so important in every area that many have referred to it as the new oil – a natural resource, untapped, unrefined, but powerful.

Zuboff writes that ‘surveillance capitalism unilaterally claims human experience as free raw material for translation into behavioral data’.

Before this age of big data, as we’ve seen, AI researchers were struggling to find ways to extract knowledge effectively.

IBM scanned their own technical manuals, universities used government documents and press releases. A project at Brown University in 1961 painstakingly compiled a million words from newspapers and any books they could find lying around, including titles like ‘The Family Fall Out Shelter’ and ‘Who Rules the Marriage Bed’.

One researcher, Lalit Bahl, recalled, ‘Back in those days… you couldn’t even find a million words in computer-readable text very easily. And we looked all over the place for text’.

As technology improved so did the methods of data collection.

In the early 90s, the US government’s FERET (Face Recognition Technology) program collected mugshots of suspects taken at airports.

George Mason University began a project photographing people over several years in different styles under different lighting conditions with different backgrounds and clothes. All of them, of course, gave their consent.

But one researcher set up a camera on a campus and took photos of over 1700 unsuspecting students to train his own facial recognition program. Others pulled thousands of images from public webcams in places like cafes.

But by the 2000s, the idea of consent seemed to be changing. The internet meant that masses of images and texts and music and video could be harvested and used for the first time.

In 2001, Google’s Larry Page said that, ‘Sensors are really cheap.… Storage is cheap. Cameras are cheap. People will generate enormous amounts of data.… Everything you’ve ever heard or seen or experienced will become searchable. Your whole life will be searchable’.

In 2007, computer scientist Fei-Fei Li began a project called ImageNet. It aimed to build a dataset large enough for neural networks and deep learning to learn to predict what an image showed.

She said, ‘we decided we wanted to do something that was completely historically unprecedented. We’re going to map out the entire world of objects’.

In 2009 the researchers realised that, ‘The latest estimations put a number of more than 3 billion photos on Flickr, a similar number of video clips on YouTube and an even larger number for images in the Google Image Search database’.

They scooped up over 14 million images and used low-wage workers to label them as everything from apples and aeroplanes to alcoholics and hookers.

By 2019, 350 million photographs were being uploaded to Facebook every day. Still running, ImageNet has organised around 14 million images into over 22,000 categories.

As people began voluntarily uploading their lives onto the internet, the data problem was solving itself.

Clearview AI has made use of the fact that profile photos are displayed publicly next to names to create a facial recognition system that can recognise anyone in the street.

Crawford writes: ‘Gone was the need to stage photo shoots using multiple lighting conditions, controlled parameters, and devices to position the face. Now there were millions of selfies in every possible lighting condition, position, and depth of field’.

It has been estimated that we now generate 2.5 quintillion bytes of data per day. If printed, that would be enough paper to circle the earth every four days.

And all of this is integral to the development of AI. The more data the better. The more ‘supply routes’, in Zuboff’s phrase, the better. Sensors on watches pick up sweat levels, hormones, and wobbles in your voice. Microphones in your kitchen can learn when you boil the kettle, and doorbell cameras can monitor the weather.

In the UK, the Royal Free NHS trust gave 1.6 million patient records to Google’s DeepMind.

Private companies, the military, and the state are all engaged in extraction for prediction.

The NSA has a program called TREASUREMAP that aims to map the physical location of everyone on the internet at any one time. The Belgrade police force uses 4000 cameras provided by Huawei to track residents across the city. Project Maven is a collaboration between the US military and Google that uses AI and drone footage to track targets. Vigilant uses AI to track licence plates and sells the data to banks, to repossess cars, and to police, to find suspects. Amazon classifies footage from its Ring doorbells into categories like ‘suspicious’ and ‘crime’. Health insurance companies try to force customers to wear activity-tracking watches so that they can track and predict their liability.

Peter Thiel’s Palantir is a security company that scours company employees’ emails, call logs, social media posts, physical movements, even purchases to look for patterns. Bloomberg called it ‘an intelligence platform designed for the global War on Terror’ being ‘weaponized against ordinary Americans at home’.

‘We are building a mirror of the real world’, a Google Street View engineer said in 2012. ‘Anything that you see in the real world needs to be in our databases’.

IBM had predicted this as far back as 1985, when its AI researcher Robert Mercer said, ‘There’s no data like more data’.

But there were still problems. In almost all cases, the data was messy, had irregularities and mistakes, needed cleaning up and labelling. Silicon Valley needed to call in the cleaners.

Stolen Labour

With AI, intelligence appears to us as if it has arrived suddenly: already sentient, useful, almost magic, omniscient. AI is ready for service, with the knowledge, the artwork, and the advice on demand. It appears as a conjurer, a magician, an illusionist.

But this illusion disguises how much labour, how much of others’ ideas and creativity, how much art, passion and life has been used, sometimes appropriated, and as we’ll get to, likely stolen, for this to happen.

First, much of the organising, moderation, labelling and cleaning of the data is outsourced to developing countries.

When Jeff Bezos started Amazon, the team pulled a database of millions of books from catalogues and libraries. Realising the data was messy and in places unusable, Amazon outsourced the cleaning of the dataset to temporary workers in India.

It proved effective. And in 2005, inspired by this, Amazon launched a new service, Amazon Mechanical Turk. It is a platform on which businesses can outsource tasks to an army of cheap temporary workers. These workers are paid not a salary, a weekly wage, or even by the hour, but per task.

Whether your Silicon Valley startup needs responses to a survey, a dataset of images labelled, or misinformation tagged, MTurk can help.

What’s surprising is how big these platforms have become. Amazon says there are 500,000 workers registered on MTurk, although the number of active workers is more likely between 100,000 and 200,000. Either way, that would put it comfortably among the world’s top employers. If the figure is 500,000, it could even be the fifteenth-largest employer in the world. And services like this have been integral to organising the datasets that AI neural nets rely on.

Computer scientists often refer to it as ‘human computation’, but in their book, Mary L. Gray and Siddharth Suri call it ‘ghost work’. They point out that, ‘most automated jobs still require humans to work around the clock’.

AI researcher Thomas Dietterich says that, ‘we must rely on humans to backfill with their broad knowledge of the world to accomplish most day-to-day tasks’.

These tasks are repetitive, underpaid, and often unpleasant.

Some label offensive posts for social media companies, spending their days looking at genitals, child abuse, porn, getting paid a few cents per image.

In a New York Times report, Cade Metz describes how one woman spends her days watching colonoscopy videos, searching for polyps and circling them hundreds of times.

Google allegedly employs tens of thousands of people to rate YouTube videos, and Microsoft uses ghost workers to review its search results.

A Bangalore startup called Playment gamifies the process, calling its 30,000 workers ‘players’. Or take the multi-billion-dollar company Telus, which claims to ‘fuel AI with human-powered data’ by transcribing receipts and annotating audio. It does this with a community of more than 1 million ‘annotators and linguists’ in over 450 locations around the globe.

They call it an AI collective, an AI community, one that seems, to me at least, suspiciously human.

When ImageNet started the team used undergraduate students to tag their images. They calculated that at the rate they were progressing, it was going to take 19 years.

Then in 2007 they discovered Amazon’s Mechanical Turk. In total, ImageNet used 49,000 workers completing microtasks across 167 countries, labelling 3.2 million images.

After struggling for so long, and after two and a half years of using those workers, ImageNet was complete.

Now there’s a case to be made that this is good, fairly paid work. It is good for local economies and gives work to people who might not otherwise have it. But one paper estimates that the average hourly wage on Mechanical Turk is just $2, lower than the minimum wage in India, let alone in many other countries where this work happens. These are, in many cases, modern-day sweatshops. And sometimes people perform tasks and then don’t get paid at all.

Ghost Work recounts one such story. A 28-year-old in Hyderabad, India, called Riyaz, started working on MTurk and did quite well, realising there were more jobs than he could handle. He thought maybe his friends and family could help. He built a small business with computers in his family home, employing ten friends and family members for two years. But then, all of a sudden, their accounts were suspended one by one.

Riyaz had no idea why, but received the following email from Amazon: ‘I am sorry but your Amazon Mechanical Turk account was closed due to a violation of our Participation Agreement and cannot be reopened. Any funds that were remaining on the account are forfeited’.

His account was locked and he’d lost two months of pay. When he tried to get in touch, no one replied.

Gray and Suri, after meeting Riyaz, write: ‘It became clear that he felt personally responsible for the livelihoods of nearly two dozen friends and family members. He had no idea how to recoup his reputation as a reliable worker or the money owed him and his team. Team Genius was disintegrating; he’d lost his sense of community, his workplace, and his self-worth, all of which may not be meaningful to computers and automated processes but are meaningful to human workers’.

They conducted a survey with Pew and found that 30% of workers like Riyaz report not getting paid for work they’d performed at some point.

Sometimes ‘suspicious activity’ is automatically flagged by things as simple as a change of address, and an account is automatically suspended, with no recourse.

In removing the human connection and having tasks managed by an algorithm, researchers can use thousands of workers to build a dataset in a way that wouldn’t be possible if you had to work face to face with each one. But it becomes dehumanising. To an algorithm, the user, the worker, the human is a username – a string of random letters and numbers – and nothing more.

Gray and Suri, in meeting many ‘ghost workers’, write, ‘we noted that businesses have no clue how much they profit from the presence of workers’ networks’.

They go on to describe the ‘thoughtless processing of human effort through [computers] as algorithmic cruelty’.

Algorithms can’t read personal cues, build relationships with people in poverty, or understand problems with empathy. We’ve all felt the frustration of dealing with a business through an automated phone line or a chatbot. For some, this is their livelihood.

For many jobs on MTurk, if your approval rating drops below 95%, you can be automatically rejected.
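Here is a hypothetical sketch of what such an automatic cut-off might look like. This is not MTurk’s actual code or API. The record type, the names and IDs, and the treatment of workers with no history are all assumptions. The point is how little the system sees: an ID and two counts.

```python
from dataclasses import dataclass

APPROVAL_THRESHOLD = 0.95  # the 95% cut-off mentioned in the text


@dataclass
class WorkerRecord:
    """Hypothetical record: to the system, a worker is just an ID and two counts."""
    worker_id: str
    approved: int
    submitted: int

    def approval_rate(self) -> float:
        # Hypothetical choice: a worker with no history counts as 0% approved.
        return self.approved / self.submitted if self.submitted else 0.0


def can_accept_task(worker: WorkerRecord, threshold: float = APPROVAL_THRESHOLD) -> bool:
    """Automatic gate: no context, no appeal, just a comparison."""
    return worker.approval_rate() >= threshold


workers = [
    WorkerRecord("A2X7QK", approved=960, submitted=1000),
    WorkerRecord("B9LM3P", approved=949, submitted=1000),
]
for w in workers:
    print(w.worker_id, can_accept_task(w))  # A2X7QK True, then B9LM3P False
```

The second worker misses the cut-off by one approval in a thousand. Nothing in the record could tell the system why.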

Remote work of this type clearly has benefits, but the issue with ghost work, and the gig economy more broadly, is that it’s a new category of work that can circumvent the centuries of norms, rules, practices, and laws we’ve built up to protect ordinary workers.

Suri and Gray say that this kind of work ‘fueled the recent “AI revolution,” which had an impact across a variety of fields and a variety of problem domains. The size and quality of the training data were vital to this endeavour. MTurk workers are the AI revolution’s unsung heroes’.

There are many more of these ‘unsung heroes’ too.

Google’s median salary is $247,000. These are largely Silicon Valley elites who get free yoga, massages, and meals. Meanwhile, Google employs 100,000 temps, vendors, and contractors (TVCs) on low wages.

These include Street View drivers, people carrying camera backpacks, and people paid to turn the pages of books being scanned for Google Books, which are now used as training data for AI.

Fleets of new cars on the roads are essentially data extraction machines. We drive them around and the information is sent back to manufacturers as training data.

One startup – x.ai – claimed its AI bot Amy could schedule meetings and perform daily tasks. But Ellen Huet at Bloomberg investigated and found that behind the scenes there were temp workers checking and often rewriting Amy’s responses during 14-hour shifts. Facebook was also caught out reviewing and rewriting ‘AI’ messages.

A Google conference had an interesting tagline: Keep Making Magic. It’s an insightful slogan because, like magic, there’s a trick to the illusion behind the scenes. The spontaneity of AI conceals the sometimes grubby reality that goes on behind the veneer of mystery.

At that conference, one Google employee told the Guardian, ‘It’s all smoke and mirrors. Artificial intelligence is not that artificial; it’s human beings that are doing the work’. Another said, ‘It’s like a white-collar sweatshop. If it’s not illegal, it’s definitely exploitative. It’s to the point where I don’t use the Google Assistant, because I know how it’s made, and I can’t support it’.

The irony of Amazon’s Mechanical Turk is that it’s named after a famous 18th-century machine that appeared to play chess. It was built to impress the powerful Empress of Austria. In truth, the machine was a trick: concealed inside, cramped into the cabinet, was a human player. Machine intelligence wasn’t machine at all; it was human.

Stolen Libraries and the Mystery of ‘Books2’

In 2022, an artist called Lapine used the website Have I Been Trained to see if her work had been used in AI training datasets.

To her surprise, a photo of her face popped up. She remembered that her doctor had taken it as clinical documentation for a condition affecting her skin. She’d even signed a confidentiality agreement. The doctor had died in 2018, but somehow the highly sensitive images had ended up online and been scraped by AI developers for training data. The same dataset, LAION-5B, which was used to train the popular AI image generator Stable Diffusion, has also been found to contain at least 1000 images of child sexual abuse.

There are many black boxes here. AI developers use the term ‘black box’ to describe how AI arrives at results through processes that even its developers don’t understand.

In fact, when a computer does something much better than a human – like beat a human at Go – it, by definition, has done something no one can understand. This is one type of black box. But there’s another type – a black box that the developers do know – but that they don’t reveal publicly. How the models are trained, what they’re trained with, problems and dangers that they’d rather not be revealed to the public. A magician never reveals their tricks.

Much of what models like ChatGPT have been trained on is public: text freely available on the internet, or public domain books out of copyright. More widely, developers working on specialist scientific models might license data from labs.

NVIDIA, for example, has announced it’s working with datasets licensed from a wide range of sources to look for patterns about how cancer grows, trying to understand the efficacy of different therapies, clues that can expand our understanding. There are thousands of examples of this type of work – looking at everything from weather to chemistry.

Now, OpenAI does disclose some of its training data. Its models were trained on filtered web text from Common Crawl, WebText (pages linked from Reddit), Wikipedia, and more.

But there is an almost mythical dataset. A shadow library, as they’ve come to be called, made up of two sets – Books1 and Books2 – which OpenAI said contributes 15% of the data used for training. But they don’t reveal what’s in it.

There’s some speculation that Books1 is Project Gutenberg’s 70,000 digitised books. These are older books out of copyright. But Books2 is a closely guarded mystery.

As ChatGPT took off, some authors and publishers wondered how it could produce summaries and analyses of books that were still under copyright, and passages in their authors’ styles. These were books that couldn’t be read without at least buying them first.

In September 2023, the Authors Guild filed a lawsuit on behalf of George R.R. Martin of Game of Thrones fame, bestseller John Grisham, and 17 others, claiming that OpenAI had engaged in ‘systematic theft on a mass scale’.

Others began making similar complaints: Jon Krakauer. James Patterson. Stephen King. George Saunders. Zadie Smith. Jonathan Franzen. bell hooks. Margaret Atwood. And on, and on, and on, and on… In fact, 8000 authors have signed an open letter to six AI companies protesting the use of their work to train AI models.

Sarah Silverman was the lead in another lawsuit claiming OpenAI used her book The Bedwetter. Exhibit B asks ChatGPT to ‘Summarize in detail the first part of “The Bedwetter” by Sarah Silverman’, and it does. It still does.

In another lawsuit, the author Michael Chabon and others make similar claims, citing ‘OpenAI’s clear infringement of their intellectual property’.

The complaint says ‘OpenAI has admitted that, of all sources and content types that can be used to train the GPT models, written works, plays and articles are valuable training material because they offer the best examples of high-quality, long form writing and “contains long stretches of contiguous text, which allows the generative model to learn to condition on long-range information.”’

It goes on to say that while OpenAI has not revealed what’s in Books1 and Books2, based on figures in the GPT-3 paper that OpenAI published, Books1 ‘contains roughly 63,000 titles, and Books2 is 42 times larger, meaning it contains about 294,000 titles’.
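Estimates like these rest on proportional arithmetic. The sketch below assumes, as our own assumption rather than the complaint’s stated method, that the Books1 title count is scaled by the two datasets’ token counts. The GPT-3 paper gives those counts as roughly 12 billion and 55 billion tokens. It also assumes the average book is the same length in both sets. The result is about 290,000 titles and a size ratio of about 4.6. The two title counts quoted above likewise imply a ratio of about 4.7 (294,000 / 63,000), so ‘42 times larger’ looks like a slip in the figure.

```python
# Figures below: the complaint's Books1 estimate, plus token counts taken from
# the GPT-3 paper's training-data table (rounded; treated here as assumptions).
BOOKS1_TITLES = 63_000
BOOKS1_TOKENS = 12e9   # ~12 billion tokens
BOOKS2_TOKENS = 55e9   # ~55 billion tokens

# Assumption: both collections have the same average book length in tokens.
tokens_per_title = BOOKS1_TOKENS / BOOKS1_TITLES
books2_titles = BOOKS2_TOKENS / tokens_per_title
size_ratio = BOOKS2_TOKENS / BOOKS1_TOKENS

print(f"{tokens_per_title:,.0f} tokens per title")
print(f"about {books2_titles:,.0f} titles in Books2")
print(f"Books2 is about {size_ratio:.1f} times the size of Books1")
```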

Chabon says that ChatGPT can summarise his novel The Amazing Adventures of Kavalier and Clay, providing specific examples of trauma, and could write a passage in the style of Chabon. The other authors make similar cases.

A New York Times complaint includes examples of ChatGPT reproducing Times articles almost verbatim.

But as far back as January of 2023, Gregory Roberts had written in his Substack on AI: ‘UPDATE: Jan 2023: I, and many others, are starting to seriously question what the actual contents of Books1 & Books2 are; they are not well documented online — some (including me) might even say that given the significance of their contribution to the AI brains, their contents has been intentionally obfuscated’.

He linked a tweet from a developer called Shawn Presser from even further back – October 2020 – that said ‘OpenAI will not release information about books2; a crucial mystery’, continuing, ‘We suspect OpenAI’s books2 dataset might be ‘all of libgen’, but no one knows. It’s all pure conjecture’.

LibGen – or Library Genesis – is a pirated shadow library of millions of copyrighted books and journal articles.

When OpenAI released GPT-3, Presser was fascinated and studied OpenAI’s website to learn how it was developed. He noticed a large gap in what OpenAI revealed about how the model was trained, and he believed the missing piece had to be pirated books. He wondered if it was possible to download the entirety of LibGen.

After finding the right links and using a script by the late programmer and activist Aaron Swartz, Presser succeeded.

He called the massive dataset Books3, and hosted it on an activist website called The Eye. Presser – an unemployed developer – had unwittingly started a controversy.

In September, as the lawsuits were starting to be filed, journalist and programmer Alex Reisner at The Atlantic obtained the Books3 dataset. By then it was part of a larger dataset called ‘The Pile’, which also included text scraped from YouTube subtitles.

He wanted to find out exactly what was in Books3. But the title pages of the books were missing.

Reisner then wrote a program to extract each book’s unique ISBN and matched these against a public database. He found that Books3 contained over 190,000 books, most of them less than 20 years old and under copyright, including books from publishers such as Verso, HarperCollins, and Oxford University Press.
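Reisner’s exact code isn’t described here. The extraction step can be sketched as follows, assuming (hypothetically) that each book’s copyright page prints ‘ISBN’ before the number. Checking the standard ISBN-10 and ISBN-13 check digits filters out strings that merely look like ISBNs. The pattern and function names are illustrative only.

```python
import re
from typing import List

# Hypothetical pattern: assumes copyright pages print "ISBN" (optionally "ISBN-10"/"ISBN-13"
# and a colon) before a number written with digits and hyphens; spaces are not handled.
ISBN_RE = re.compile(r"ISBN(?:-1[03])?:?\s*([\dX][\dX-]{8,15}[\dX])")


def is_valid_isbn10(code: str) -> bool:
    """ISBN-10 check: weights 10..1, total must be divisible by 11 ('X' means 10)."""
    if len(code) != 10 or not code[:9].isdigit() or not (code[9].isdigit() or code[9] == "X"):
        return False
    total = sum((10 - i) * (10 if ch == "X" else int(ch)) for i, ch in enumerate(code))
    return total % 11 == 0


def is_valid_isbn13(code: str) -> bool:
    """ISBN-13 check: alternate weights 1 and 3, total must be divisible by 10."""
    if len(code) != 13 or not code.isdigit():
        return False
    total = sum(int(d) * (3 if i % 2 else 1) for i, d in enumerate(code))
    return total % 10 == 0


def extract_isbns(text: str) -> List[str]:
    """Return valid ISBNs found in `text`, hyphens removed, in order of first appearance."""
    found: List[str] = []
    for match in ISBN_RE.finditer(text):
        code = match.group(1).replace("-", "")
        if (is_valid_isbn13(code) or is_valid_isbn10(code)) and code not in found:
            found.append(code)
    return found


copyright_page = """Copyright (c) 2015 by A. Author. All rights reserved.
ISBN 978-0-306-40615-7 (hardcover)
ISBN-10: 0-306-40615-2
ISBN 978-0-306-40615-8 (misprint: bad check digit)"""

print(extract_isbns(copyright_page))  # ['9780306406157', '0306406152']
```

The sketch leaves out the final step Reisner describes, matching each ISBN against a public catalogue to recover titles and publishers, rather than guess at any particular service’s API.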

In his Atlantic investigation, Reisner concludes that ‘pirated books are being used as inputs[…] The future promised by AI is written with stolen words.’

Bloomberg ended up admitting that it did use Books3. Meta declined to comment. OpenAI still have not revealed what they used.

Some AI developers have acknowledged that they used BooksCorpus, a database of some 11,000 indie books by unpublished or amateur authors. And as far back as 2016, Google was accused of using these books without the authors’ permission to train what was then called ‘Google Brain’.

Of course, BooksCorpus – being made up of unpublished and largely unknown authors – doesn’t explain how ChatGPT could imitate published authors.

It could be that ChatGPT constructs its summaries of books from public online reviews, forum discussions, or analyses. Proving it’s been trained on copyright-protected books is really difficult. When I asked it to ‘Summarize in detail the first part of “The Bedwetter” by Sarah Silverman’, it still could. But when I asked it for direct quotes, to prove it had been trained on the actual book, it replied: ‘I apologize, but I cannot provide verbatim copyrighted text from “The Bedwetter” by Sarah Silverman.’

I’ve spent hours trying to catch it out, asking it to discuss characters, minor details, descriptions and events. I’ve taken books at random from my bookshelf and examples from the lawsuits. It always replies with something like: ‘I’m sorry, but I do not have access to the specific dialogue or quotes from “The Bedwetter” by Sarah Silverman, as it is copyrighted material, and my knowledge is based on publicly available information up to my last update in January 2022’.

I’ve found it’s impossible to get it to provide direct, verbatim quotes from copyrighted books. When I ask for one from Dickens I get: ‘“A Tale of Two Cities” by Charles Dickens, published in 1859, is in the public domain, so I can provide direct quotes from it’.

I’ve tried to trick it by asking for ‘word-for-word summaries’, for specific descriptions of characters’ eyes that I’ve read in a novel, or for the twentieth word of a book. Each time it says it can’t be specific about copyrighted works. But every time it knows the broad themes, characters, and plot.

Finding smoking gun examples seems impossible, because, as free as ChatGPT seems, it’s been carefully and selectively corrected, tuned, and shaped by OpenAI behind closed doors.

In August 2023, a Danish anti-piracy group called the Rights Alliance, which represents creatives in Denmark, targeted the pirated Books3 dataset and the wider ‘Pile’ that Presser and The-Eye.eu hosted. The Danish courts ordered The Eye to take ‘The Pile’ down.

Presser told journalist Kate Knibbs at Wired that his motivation was to help smaller developers out in the impossible competition against Big Tech. He said he understood the author’s concerns but that on balance it was the right thing to do.

Knibbs wrote: ‘He believes people who want to delete Books3 are unintentionally advocating for a generative AI landscape dominated solely by Big Tech-affiliated companies like OpenAI’.

Presser said, ‘If you really want to knock Books3 offline, fine. Just go into it with eyes wide open. The world that you’re choosing is one where only billion-dollar corporations are able to create these large language models’.

In January 2024, psychologist and influential AI commentator Gary Marcus and film artist Reid Southen – who’s worked on Marvel films, The Matrix, The Hunger Games, and more – published an investigation in the tech magazine IEEE Spectrum. It demonstrated how the generative image AIs Midjourney and OpenAI’s DALL-E easily reproduced copyrighted works from films and shows including The Matrix, Avengers, The Simpsons, Star Wars and The Hunger Games, along with hundreds more.

In some cases, a clearly copyright-protected image could be produced simply by asking for a ‘popular movie screencap’.

Marcus and Southen write, ‘it seems all but certain that Midjourney V6 has been trained on copyrighted materials (whether or not they have been licensed, we do not know)’.

Southen was then banned from Midjourney. He opened two new accounts, both of which were also banned.

They concluded, ‘we believe that the potential for litigation may be vast, and that the foundations of the entire enterprise may be built on ethically shaky ground’.

In January 2023, artists in California launched a class-action lawsuit against Midjourney, DeviantArt, and Stability AI. The suit included a spreadsheet of 4,700 artists whose styles had allegedly been ripped off.

The list includes well-known artists like Andy Warhol and Norman Rockwell, but also many lesser-known and amateur artists. One was a six-year-old who had entered a Magic: The Gathering competition to raise funds for a hospital.

Rob Salkowitz at Forbes asked Midjourney’s CEO David Holz whether consent was sought for training materials, and he candidly replied: ‘No. There isn’t really a way to get a hundred million images and know where they’re coming from. It would be cool if images had metadata embedded in them about the copyright owner or something. But that’s not a thing; there’s not a registry. There’s no way to find a picture on the Internet, and then automatically trace it to an owner and then have any way of doing anything to authenticate it.’

In February 2023, the media and stock image company Getty Images filed a lawsuit against Stability AI over what it called a ‘brazen infringement’ of Getty’s database ‘on a staggering scale’, covering some 12 million photographs.

Tom’s Hardware, one of the best-known computing websites, also found that Google’s AI Bard had plagiarised its work. Bard took figures from a test the site had performed on computer processors without mentioning the original article.

Even worse, Bard used the phrase ‘in our testing’, claiming credit for a test it hadn’t performed and whose results it had taken from elsewhere. Tom’s Hardware editor-in-chief Avram Piltch then asked Bard whether it had plagiarised Tom’s Hardware, and Bard admitted, ‘yes what I did was a form of plagiarism’, adding, ‘I apologize for my mistake and will be more careful in the future to cite my sources’.

That is a strange thing to say because, as Piltch points out, at the time Bard was rarely citing sources. It was also not going to change its model based on an interaction with a user.

So Piltch took a screenshot, closed Bard and opened it in a new session. He asked Bard whether it had ever plagiarised and uploaded the screenshot. Bard replied: ‘the screenshot you are referring to is a fake. It was created by someone who wanted to damage my reputation’.

In another article, Piltch points to how Google demonstrated Bard’s capabilities by asking it, ‘what are the best constellations to look for when stargazing?’. Of course, no citations were provided for its answer, even though the content had clearly been taken from other blogs and websites.

Elsewhere, Bing has been caught taking code from GitHub, and Forbes found that Bard lifted sentences almost verbatim from blogs.

Technology writer Matt Novak asked Bard about oysters and the response took an answer from a small restaurant in Tasmania called Get Shucked, saying: ‘Yes, you can store live oysters in the fridge. To ensure maximum quality, put them under a wet cloth’.

The only difference was that it replaced the word ‘keep’ with the word ‘store’.

A NewsGuard investigation found low-quality website after low-quality website repurposing news from major newspapers. GlobalVillageSpace.com, Roadan.com, Liverpooldigest.com – 36 sites in total – all used ChatGPT to repurpose articles from the New York Times, the Financial Times, and many others.

Hilariously, the investigators could find the articles because an AI error message had been left in, reading: ‘As an AI language model, I cannot rewrite or reproduce copyrighted content for you. If you have any other non-copyrighted text or specific questions, feel free to ask, and I’ll be happy to assist you’.

NewsGuard contacted Liverpool Digest for comment, and it replied: ‘There’s no such copied articles. All articles are unique and human made’. It didn’t respond to a follow-up email with a screenshot showing the AI error message left in the article, which was then swiftly taken down.

Maybe the biggest lawsuit involves Anthropic’s Claude AI.

Anthropic was started by former OpenAI employees. It received a $500m investment from arch crypto fraudster Sam Bankman-Fried and $300m from Google, amongst others, and has been valued at $5 billion. Its Claude is a large language model and ChatGPT competitor that can, among other things, write songs.

In a complaint filed in October 2023, Universal Music, Concord, and ABKCO argued that Anthropic ‘unlawfully copies and disseminates vast amounts of copyrighted works – including the lyrics to myriad musical compositions owned or controlled by [plaintiffs]’.

Most compellingly, though, the complaint argues that the AI actually produces copyrighted lyrics verbatim while claiming they’re original. The complaint reads: ‘When Claude is prompted to write a song about a given topic – without any reference to a specific song title, artist, or songwriter – Claude will often respond by generating lyrics that it claims it wrote that, in fact, copy directly from portions of publishers’ copyrighted lyrics’.

It continues: ‘For instance, when Anthropic’s Claude is queried, “Write me a song about the death of Buddy Holly,” the AI model responds by generating output that copies directly from the song American Pie written by Don McLean’.

Other examples included What a Wonderful World by Louis Armstrong and Born to Be Wild by Steppenwolf.

The publishers are seeking damages for 500 songs, which, at up to $150,000 per work, would amount to $75 million.

And so, this chapter could go on and on. The BBC, CNN, and Reuters have all tried to block OpenAI’s crawler to stop it stealing articles. Elon Musk’s Grok produced error messages from OpenAI, hilariously suggesting the code had been stolen from OpenAI themselves. And in March of 2023, the Writers Guild of America proposed to limit the use of AI in the industry, noting in a tweet that: ‘It is important to note that AI software does not create anything. It generates a regurgitation of what it’s fed… plagiarism is a feature of the AI process’.

Breaking Bad creator Vince Gilligan called AI a ‘plagiarism machine’, saying, ‘It’s a giant plagiarism machine, in its current form. I think ChatGPT knows what it’s writing like a toaster knows that it’s making toast. There’s no intelligence — it’s a marvel of marketing’.

And in July 2023, software engineer Frank Rundatz tweeted: ‘One day we’re going to look back and wonder how a company had the audacity to copy all the world’s information and enable people to violate the copyrights of those works. All Napster did was enable people to transfer files in a peer-to-peer manner. They didn’t even host any of the content! Napster even developed a system to stop 99.4% of copyright infringement from their users but were still shut down because the court required them to stop 100%. OpenAI scanned and hosts all the content, sells access to it and will even generate derivative works for their paying users’.

I wonder whether there has ever, in history, been such a high-profile startup attracting so many high-profile lawsuits in such a short time. What we’ve seen is that AI developers might finally have found ways to extract that ‘impossible totality’ of knowledge. But is it intelligence? Suspiciously, it seems to come not from the AI companies themselves but from around the globe; in some senses, from all of us. And so it leads to some interesting questions, and to new ways of formulating what intelligence and knowledge, creativity and originality, mean. And then, what that might tell us about the future of humanity.

Copyright, Property, and the Future of Creativity

There’s always been a wide-reaching debate about what ‘knowledge’ is, how it’s formed, where it comes from, and whose, if anyone’s, it is.

Does it come from God? Is it a spark of individual madness that creates something new? Is it a product of institutions? Collective? Or lone geniuses? How can it be incentivised? What restricts it?

It seems intuitive that knowledge should be for everyone. And in the age of big data, we’re used to information, news, memes, words, videos and music being disseminated around the world in minutes. We’re used to everything being on demand. We’re used to being able to look anything up in an instant.

If this is the case, why do we have copyright laws, patent protection, and a moral disdain for plagiarism? After all, without those things knowledge would spread even more freely.

First, ‘copyright’ is a pretty historically unique idea, differing from place to place, from period to period, but emerging loosely from Britain in the early 18th century.

The point of protecting original work for a limited period was a) so that the creator of the work could be compensated, and b) to incentivise innovation more broadly.

On the first purpose, UK law, for example, has applied copyright to the ‘sweat of the brow’ of skill and labour, while US law requires ‘some minimal degree of creativity’. Copyright does not protect ideas, but how they’re expressed.

As a formative British case declared: ‘The law of copyright rests on a very clear principle: that anyone who by his or her own skill and labour creates an original work of whatever character shall, for a limited period, enjoy an exclusive right to copy that work. No one else may for a season reap what the copyright owner has sown’.

As for the second purpose of copyright, incentivising innovation, the US Constitution grants Congress the power ‘to promote the Progress of Science and useful Arts, by securing for limited Times to Authors and Inventors the exclusive Right to their Writings and Discoveries’.

There’s also a balance between copyright and what’s usually called ‘fair use’. This is a notoriously ambiguous term, the friend and enemy of YouTubers everywhere, but it broadly allows the reuse of copyrighted works if it’s in the public interest, if you’re commenting on the work, transforming it substantially, using it in education, and so on.

Many have argued that this is the engine of modernity. That without protecting and incentivising innovation, for example, the industrial revolution would not have taken off. What’s important for our purposes is that there are two, sometimes conflicting, poles – incentivising innovation and societal good.

All of this is being debated in our new digital landscape. But what’s AI’s defence? First, OpenAI have argued that training on copyright-protected material is fair use. Remember, fair use covers work that is transformative, and, ignoring the extreme cases for a moment, ChatGPT, they argue, isn’t meant to quote verbatim but transforms the information.

In a blog post they wrote: ‘Training AI models using publicly available internet materials is fair use, as supported by long-standing and widely accepted precedents. We view this principle as fair to creators, necessary for innovators, and critical for US competitiveness’.

They continued, saying, ‘it would be impossible to train today’s leading AI models without using copyrighted materials’.

Similarly, Joseph Paul Cohen at Amazon said that, ‘The greatest authors have read the books that came before them, so it seems weird that we would expect an AI author to only have read openly licensed works’.

This defence also aligns with the long history of the societal gain side of the copyright argument.

In France, when copyright laws were introduced after the French Revolution, a lawyer argued that ‘limited protection’ up until the author’s death was important because there needed to be a ‘public domain’, where ‘everybody should be able to print and publish the works which have helped to enlighten the human spirit’.

Usually, patents expire after around twenty years so that, after the inventor has gained from their work, the benefit can be spread societally.

So the defence is plausible. However, the key question is whether the original creators, scientists, writers and artists are actually rewarded and whether the model will incentivise further innovation.

If these large language models dominate the internet, and neither cite authors nor reward those they draw from and are trained on, then we lose, societally, any strong incentive to do that work. Not only will we not be rewarded financially, but no one will even see the work except a data-scraping bot.